From MIT's Technology Review:

Computer scientists have discovered a way to number-crunch an individual’s own preferences to recommend content from others with opposing views. The goal? To burst the “filter bubble” that surrounds us with people we like and content that we agree with.

The term “filter bubble” entered the public domain back in 2011 when the internet activist Eli Pariser coined it to refer to the way recommendation engines shield people from certain aspects of the real world.

Pariser used the example of two people who googled the term “BP”. One received links to investment news about BP while the other received links to the Deepwater Horizon oil spill, presumably as a result of some recommendation algorithm.

This is an insidious problem. Much social research shows that people prefer to receive information that they agree with instead of information that challenges their beliefs. This problem is compounded when social networks recommend content based on what users already like and on what people similar to them also like.

This is the filter bubble—being surrounded only by people you like and content that you agree with.

And the danger is that it can polarise populations, creating potentially harmful divisions in society.

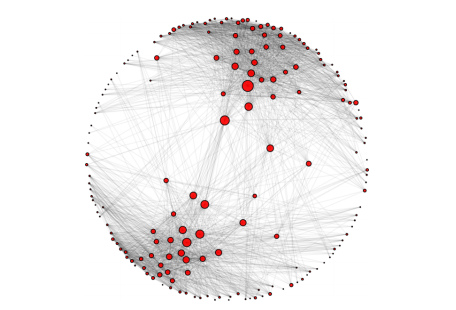

Today, Eduardo Graells-Garrido at the Universitat Pompeu Fabra in Barcelona as well as Mounia Lalmas and Daniel Quercia, both at Yahoo Labs, say they’ve hit on a way to burst the filter bubble. Their idea is that although people may have opposing views on sensitive topics, they may also share interests in other areas. And they’ve built a recommendation engine that points these kinds of people towards each other based on their own preferences.
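To make the idea concrete, here is a rough Python sketch of how such a recommender might rank people. It is not the researchers' actual method, which the excerpt does not spell out. The profile layout, the `stance` value in [-1, 1], the cosine measure of shared interests, and the choice to multiply relevance by view gap are all assumptions made for illustration.

```python
from math import sqrt

def cosine(a, b):
    """Cosine similarity between two sparse interest vectors (dicts)."""
    dot = sum(a.get(k, 0.0) * b.get(k, 0.0) for k in set(a) | set(b))
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(user, others, top_n=1):
    """Rank other people by shared interests weighted by how far apart
    their stance on a sensitive topic is (hypothetical scoring rule)."""
    scored = []
    for name, profile in others.items():
        relevance = cosine(user["interests"], profile["interests"])
        # Stances lie in [-1, 1], so the gap is scaled to [0, 1].
        view_gap = abs(user["stance"] - profile["stance"]) / 2.0
        scored.append((relevance * view_gap, name))
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_n]]

# Made-up example profiles.
alice = {"interests": {"football": 1.0, "cooking": 1.0}, "stance": -1.0}
others = {
    "bob":   {"interests": {"football": 1.0, "cooking": 1.0}, "stance": 1.0},
    "carol": {"interests": {"football": 1.0, "cooking": 1.0}, "stance": -1.0},
    "dave":  {"interests": {"chess": 1.0}, "stance": 1.0},
}
print(recommend(alice, others))
```

In this toy data, bob shares alice's interests but holds the opposite stance, so he ranks first. Carol agrees with alice (no view gap) and dave shares none of her interests (no relevance), so both score zero.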

The result is that individuals are exposed to a much wider range of opinions, ideas and people than they would otherwise experience. And because this is done using their own interests, they end up being equally satisfied with the results (although not without a period of acclimatisation). “We nudge users to read content from people who may have opposite views, or high view gaps, in those issues, while still being relevant according to their preferences,” say Graells-Garrido and co....MORE